This conference is organised by PhD students at the Department of Digital Humanities.

11 June 2024 – King’s College London, Strand Campus (Bush House Lecture Theatre 1)

Interdisciplinary Perspectives: Bridging Sociological Studies in the Digital Age

The Department of Digital Humanities at King’s College London is pleased to host the international conference Interdisciplinary Perspectives: Bridging Sociological Studies in the Digital Age on 11 June 2024. The conference will be in-person only and will take place in London, at King’s College London Strand Campus (WC2R 2LS), where the Department of Digital Humanities is based.

The event aims to foster discussion and collaboration across disciplines, in particular Natural Language Processing (NLP), Social Sciences, and Social Media and Digital Methods, within the broader field of Digital Humanities.

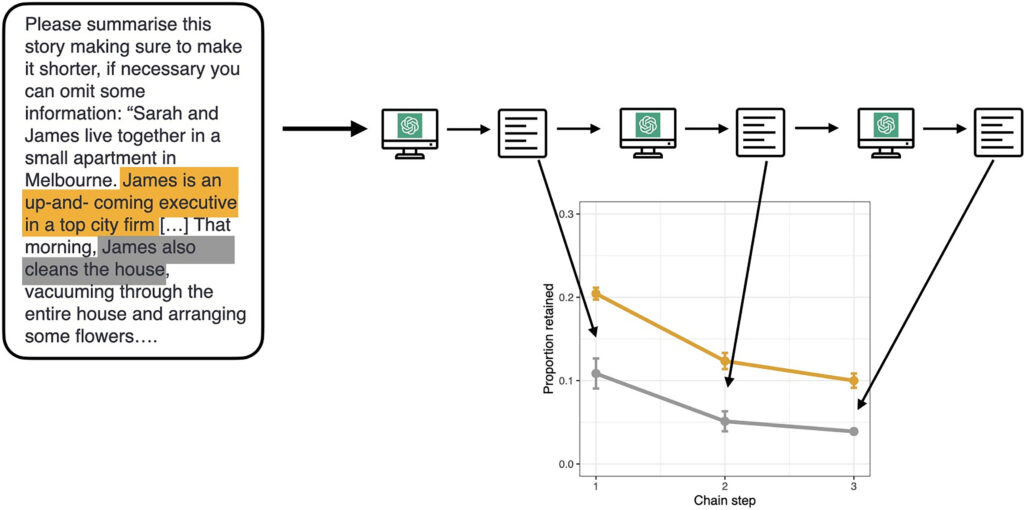

In an era defined by unprecedented connectivity and vast digital datasets, the intersection of NLP, Social Sciences, and Social Media alongside Digital Methods sparks a profound dialogue, reshaping our understanding of human behaviour and societal dynamics within digital ecosystems. NLP emerges as a transformative force, equipping computers with the ability to comprehend, interpret, and generate human language, bridging the gap between digital data and human insights. This integration with Social Sciences fosters a multidisciplinary framework, enabling nuanced analyses of cultural phenomena, societal trends, and individual behaviours within digital realms. Concurrently, Social Media platforms serve as dynamic arenas, offering real-time glimpses into human interactions, preferences, and sentiments, and are therefore invaluable sources for both NLP-driven research and social science inquiry. Through this interconnected dialogue, researchers navigate the complexities of the digital landscape, uncovering layers of meaning and driving innovation at the nexus of technology, social sciences, and digital methods.

The conference will feature Andrea Nini (Manchester University) and Chiara Amini (UCL) as keynote speakers. They will offer insights into the latest advancements, challenges, and future directions within their respective fields, enriching the interdisciplinary dialogue and inspiring attendees to explore innovative avenues at the intersection of sociological studies and the digital age.

For this conference, we invite submissions of abstracts in English focusing on the intersection of sociological studies and the digital age.

Abstracts should be tailored for presentations lasting 20 minutes, with an additional 10 minutes allotted for questions. We particularly welcome submissions in the following areas or any intersection between them:

- NLP

- Social Sciences

- Social Media and Digital Methods

We encourage submissions from researchers, scholars, practitioners, and postgraduate researchers who are exploring innovative approaches, methodologies, and findings within these domains.

Submission Guidelines:

- Abstracts should not exceed 300 words in length.

- Abstracts should include the name of the author(s) and their affiliation(s).

- Abstracts should clearly outline the research objectives, the methodology, and, where possible, the anticipated contributions to the field.

- Abstracts must be submitted via email to andrea.farina[at]kcl.ac.uk by 20 April 2024. Please write “ABSTRACT Interdisciplinary Perspectives Conference” as the subject of your email.

- Please indicate the preferred topic area for your presentation (NLP, Social Sciences, or Social Media and Digital Methods).

- Notification of acceptance: 10 May 2024.

For inquiries and further information, please contact Andrea Farina at andrea.farina[at]kcl.ac.uk.

The organisers:

Andrea Farina, Rendan Liu, Chiara Mignani, Zhi Ye

Conference Contact: andrea.farina[at]kcl.ac.uk