by Paul Schütze

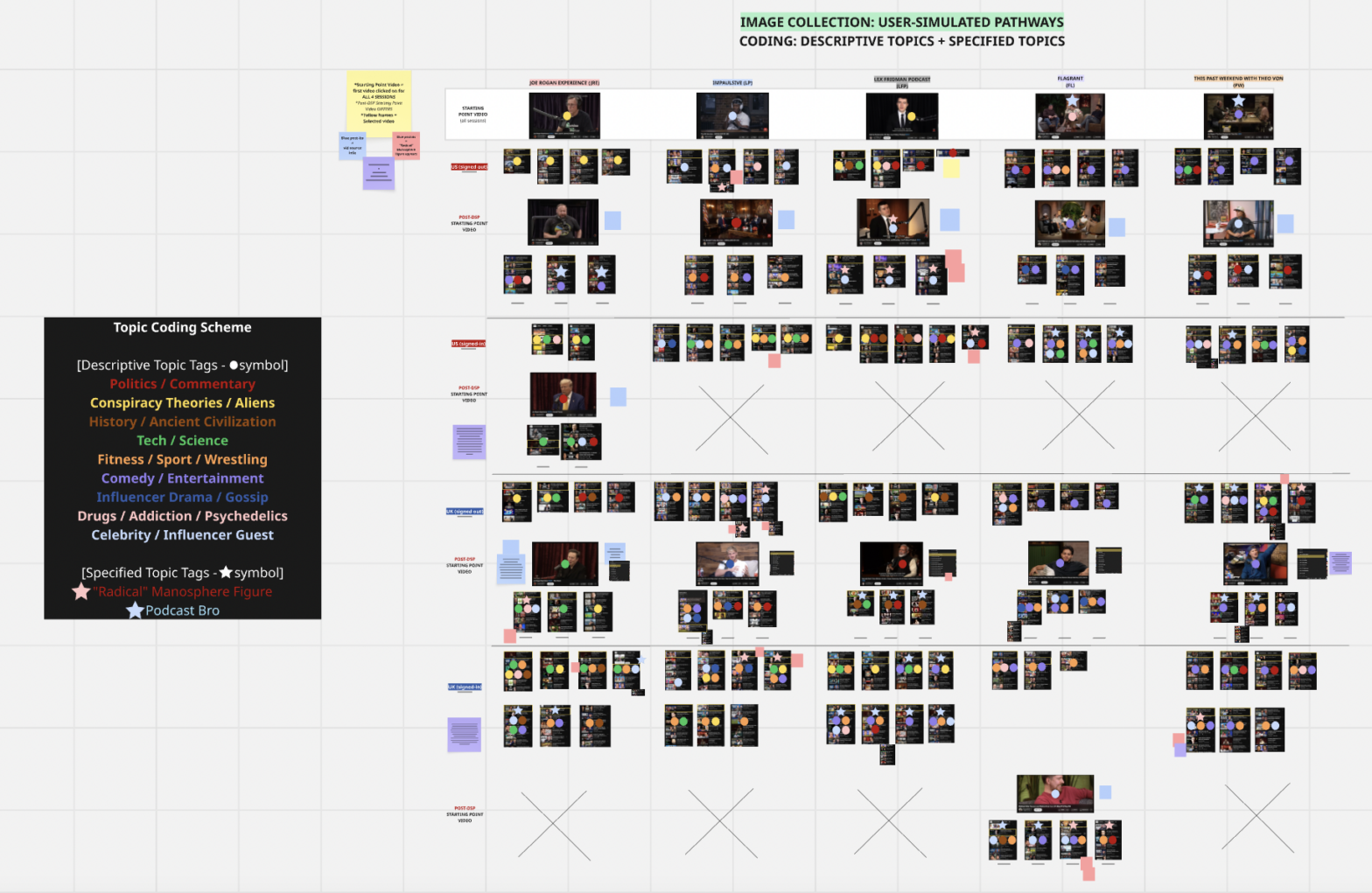

I am currently a Visiting Senior Research Fellow at the Department of Digital Humanities, King’s College London. As part of that visit, I recently gave a talk titled “A Social Critique of AI amid the Climate Crisis.” In that talk, I argued that AI is more than an environmentally costly technology. It is a system and an ideology that keeps extraction going and makes the current socio-economic dynamics seem inevitable. From this perspective, climate apathy is not a political failure. It is the systemic outcome of an AI-driven social order.

What I have laid out here is a pointed version of my argument, without the full theoretical scaffolding underneath it. If you are interested in the longer version, I am genuinely happy to talk through it. Please reach out!

But what does a social critique of AI actually mean?

There is a perspective on the AI-and-climate connection that most people have heard by now. Data centres consume enormous amounts of energy. Training large models releases tons of CO₂. The hardware for AI models to run on requires the mining of rare earth minerals under brutal conditions. All of this is true, and all of this is important.

But what I call a social critique of AI goes beyond this perspective. Beyond the environmental impact, there lingers an underlying question: why does any of this continue to happen? If we know the climate effects of AI (and of other technologies, for that matter), why do we not change course?

Simply put, the AI-and-climate connection is not an epistemological issue; it is not a matter of having too little knowledge. Nor is it merely an environmental footprint problem. That would be a flaw that could be fixed with a different technological design. Run the data centres on renewable energy. Build more efficient chips. Regulate the emissions of model training. All easy solutions. Yet, while these measures may be helpful in some regard, they do not solve the underlying problem. The extractive order simply keeps going.

A social critique of AI begins by refusing this story. No better design, no further knowledge, and no greener infrastructure can get us out of this crisis. A social critique insists on looking at AI not as a technology with some negative side effects, but as a system embedded in a specific socio-economic order, one built on the logics of extraction, control, and apparent efficiency. This very system will not fix the climate crisis. The climate crisis is not a malfunction of that system. It is a structural feature.

Understanding AI in terms of a social order

Once you refuse this story, and once you shift the frame, AI’s climate impact starts to look different. With this, we can now conceptualise AI as an ideological apparatus – an assemblage of stories and assumptions, of material practices and institutions – that makes the current social order feel natural and inevitable.

AI as an ideological apparatus works like this: it tells us that optimising technological systems is progress. That efficiency is inherently valuable. That data-driven decisions are superior. That technological innovation is the primary driver of human well-being. Yet these are very specific claims that serve very specific interests. They reproduce what is already there. They make the current order feel permanent and even desirable. They make alternatives feel naive and impossible. This ideological function is what makes AI so damaging in the context of the climate crisis. AI does not just drive up emissions. It deepens the conditions that prevent any serious climate action.

This is the key point of a social critique of AI amid the climate crisis. We are on a trajectory toward three degrees of warming by 2050. Three degrees means ecosystem collapse, food system failure, and large parts of the planet becoming uninhabitable. AI keeps these realities at a distance, just far enough that confronting the catastrophe does not feel necessary.

A social critique refuses to accept that comfort. It calls out the underlying structures that produce the climate crisis. It presents AI as an integral part of these structures. It insists on facing reality rather than retreating into the ideological narratives that make business-as-usual feel acceptable.

This is why a social critique of AI amid the climate crisis is important. The goal cannot simply be to design a better AI ethics framework or to improve the efficiency of data centres. The aim cannot be to propose the right carbon tax or the right AI regulation. Instead, we need to make visible the systems that keep (re)producing this outcome. We must trace how AI is embedded in and amplifies these very systems, and in doing so refuse the stories that make all of this seem inevitable. AI is helping to build a world in which saving the planet means something entirely different from what it would actually require. Making this visible is the aim of the social critique I propose here.