Event organised by the Computational Humanities research group.

To register for the seminar and obtain the link to the call, please fill in this form by Monday 18 March 2024.

20 March 2024 – 12pm GMT

Remote – Via Microsoft Teams

Alberto Acerbi (University of Trento), Large language models show human-like content biases in transmission chain experiments

Abstract

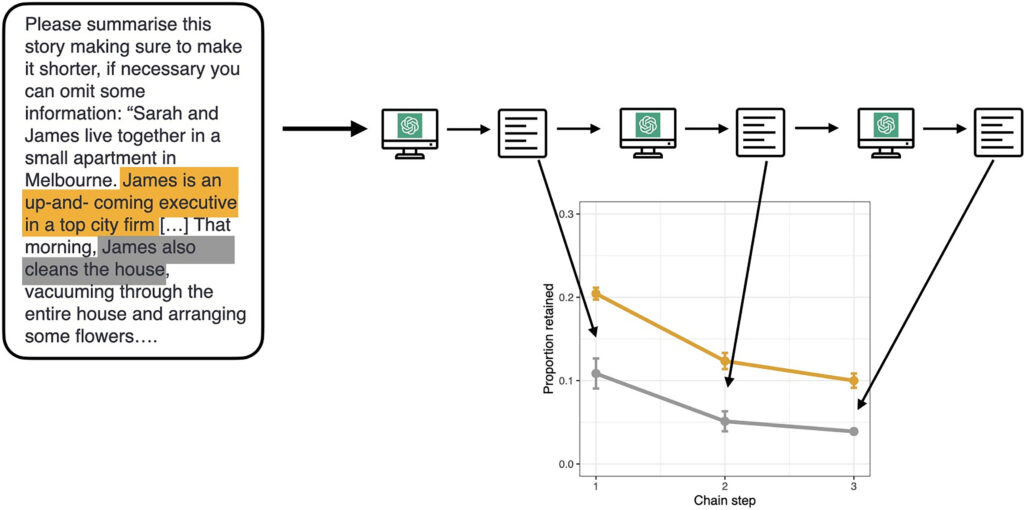

As the use of Large Language Models (LLMs) grows, it is important to examine whether they exhibit biases in their output. Research in Cultural Evolution, using transmission chain experiments, demonstrates that humans have biases to attend to, remember, and transmit some types of content over others. In five pre-registered experiments with the same methodology, we find that the LLM ChatGPT-3 replicates human results, showing biases for content that is gender-stereotype consistent (Exp 1), negative (Exp 2), social (Exp 3), threat-related (Exp 4), and biologically counterintuitive (Exp 5), over other content. The presence of these biases in LLM output suggests that such content is widespread in its training data and could have consequential downstream effects, by magnifying pre-existing human tendencies for cognitively appealing, but not necessarily informative or valuable, content.

Bio

I am a researcher in the field of cultural evolution. My work is at the interface of psychology, anthropology, and sociology. I am particularly interested in contemporary cultural phenomena, and I use a naturalistic, quantitative, and evolutionary approach with different methodologies, especially individual-based models and quantitative analysis of large-scale cultural data. Currently, I focus on using a cultural evolutionary framework to study the effects of digital technologies, and I wrote a book for Oxford University Press: Cultural Evolution in the Digital Age.

I am an Assistant Professor in the Department of Sociology and Social Research at the University of Trento, and a member of C2S2 – Centre for Computational Social Science and Human Dynamics.